100x Increase in SOLR Performance and Throughput

April 27, 2009

Is your SOLR installation running slower than you think it should? Performance, throughput and scalability not what you are expecting or hoping? Do you constantly see that others have much higher SOLR query performance and scalability than you do? All it might take to fix your woes is a simple schema or query change.

The scenario I am about to describe is proof positive that you should always take the time to understand the underlying functionality of whatever operating system, programming language or application you are using. Let my oversight and ‘quick fix solution’ be a lesson to you: it is almost always worth the upfront cost of doing something right the first time so you don’t have to keep revisiting the same issue.

There and Back Again, an EC2 MySQL Cluster

January 26, 2009

Limitations of EC2 as a web platform:

- Price– An m1.xlarge instance will run you ~$600 per month with data transfer costs. Managed hosting solutions run cheaper, especially if you plan on purchasing in bulk. The grid is designed for on-demand computation, not as a cost-efficient web services platform.

- Configuration– There are a limited number of options and you will not be able to tailor the hardware to your application. Databases over 10GB in size will have performance issues since that is the memory cap.

- Network storage– The primary disks offer limited storage; additional volumes need to be attached across the network, at an additional cost.

- Software– No hardware-based solutions for load balancing or custom application servers. The model is software driven, so all needs must be met with a software solution: there is no dedicated switching, routers, firewall, or load balancers. In a managed hosting or colocation solution you will at least have the option of adding additional hardware and having a private network.

EC2 might be right for you if:

- Distribution Awareness– Your application was designed to scale horizontally from the get-go and you can take advantage of grid computing.

- Research and Development– EC2 & RightScale will allow you to bring up new servers, test configurations, and scale quickly. If you are not sure what your hardware demands will be, or what the scope of the project is, this gives you some flexibility to get things right before committing to rather lengthy contracts with other hosting options.

- Disaster Recovery– If you need an off-site mirror for your site that you can keep dormant and activate as needed.

Over the past several months I have been doing extensive development using Amazon’s EC2 as my hardware infrastructure. I was tasked with taking CitySquares.com from a New England area hyper-local search and business directory to a national site in a few months. Because of the memory limitations of EC2 instances (the m1.xlarge provides only 15GB), the jump from a 15GB database to anything larger becomes very costly. When everything could be contained in two servers in a master/slave environment, we were able to provide redundancy, performance, and easy management when working with the database. The final estimates for the national rollout put our core data at 50GB, far too large for any one EC2 instance. Going to disk was not an option: everything works off of EBS attached storage, so any disk write means traveling over the network, an additional overhead that degrades performance even further once the data no longer fits in RAM. Then there was the nature of the data itself: on any page load, any piece of data could be requested.

With OS overhead, index storage, and ndb overhead, each m1.xlarge instance gave about 12GB of usable storage. Include replication, and it comes down to 6GB of storage per node. Storing the ~50GB database I had planned on required 8 storage nodes, two management servers, and two mysqld API servers. This is where it became important to understand the advantages of vertical scaling versus horizontal scaling. EC2 provided fast horizontal scaling and configuration: servers can be launched on demand and their configurations scripted. While I appreciated that aspect of cloud computing, and being able to bring that many servers up and configure each one relatively quickly, I really just needed two decent boxes with 64GB of RAM each in a master/slave setup. The operating cost for the cluster was $6000/month, a hefty bill considering I could buy all the hardware needed to run the cluster with just a few months of EC2 payments.
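The sizing in the paragraph above can be checked with a few lines of arithmetic. This is only a sketch: every figure is taken from the post, the replication factor of 2 is inferred from the drop from 12GB to 6GB per node, and the variable names are made up for illustration.

```python
# Back-of-the-envelope sizing for the cluster described above.
# Figures come from the post; REPLICAS = 2 is inferred from 12GB -> 6GB per node.

USABLE_GB_PER_INSTANCE = 12      # m1.xlarge after OS, index and ndb overhead
REPLICAS = 2                     # every piece of data is stored twice (assumption)
STORAGE_NODES = 8
MANAGEMENT_SERVERS = 2
MYSQLD_API_SERVERS = 2
MONTHLY_CLUSTER_COST_USD = 6000

# Replication halves what each node contributes to the data set.
effective_gb_per_node = USABLE_GB_PER_INSTANCE / REPLICAS

# Total data the storage nodes can hold between them.
cluster_capacity_gb = STORAGE_NODES * effective_gb_per_node

# Every role runs on its own instance.
total_servers = STORAGE_NODES + MANAGEMENT_SERVERS + MYSQLD_API_SERVERS

# Average monthly cost of one instance in the cluster.
cost_per_server_usd = MONTHLY_CLUSTER_COST_USD / total_servers

print(f"effective storage per node: {effective_gb_per_node:.0f} GB")
print(f"cluster capacity:           {cluster_capacity_gb:.0f} GB")
print(f"servers in the cluster:     {total_servers}")
print(f"average cost per server:    ${cost_per_server_usd:.0f}/month")
```

Eight storage nodes at 6GB each hold roughly 48GB, which is how the ~50GB data set ends up on twelve instances in total.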

Twelve servers and a working cluster later, we were able to successfully roll out our MySQL Cluster with minimal performance loss. A lot of that was due to tweaking every query top to bottom. The site runs on a Drupal core, which meant many of the queries, both in our in-house code and in the core, were not designed with distribution awareness. This was another growing pain: the network overhead of running 12 servers on shared resources, with mediocre latency and throughput limitations, amplified flaws in the database design, and every poorly designed query and join degraded performance significantly.

To give credit where credit is due, EC2 did allow us to scale the site up rather quickly. We were able to test server configurations and new applications, and management was easy. It would not have been possible for us to push out the data, handle the influx of new traffic, and expand as fast as we did without it. Long term, however, it made absolutely no sense to stay on EC2 once we were finished scaling up. It is a great platform for start-ups that need to configure and launch servers for their service or application and grow rapidly.

As a database platform, until EC2 offers more configuration options in its hardware and the ability to increase memory, the cap of 15GB will make EC2 problematic for any database that plans on growing past that. It is important to understand your application and database needs before considering EC2. It is no surprise to me that, even with the RightScale interface and the easy management of EC2, web sites are reluctant to switch off their own hardware or managed hosting.

Both Sun and Continuent are pursuing MySQL Clustering on cloud computing. As of this post, Continuent for EC2 was still in closed beta and testing and Sun is doing their own research into offering more support for database clusters on compute clouds. Maybe in the future this can be something to revisit, but it would require more ndb configuration options on the network layer to cope with shared bandwidth and additional hardware configurations by Amazon (someday).

The 6.4 release, which is in beta now, offers new features that would make MySQL Clustering more attractive on cloud computing architecture:

- Ability to add nodes and node groups online. This will allow the database to scale up without taking the cluster down.

- Data node multithreading support. The m1.xlarge instance comes with 4 cores.

If you are setting up a MySQL cluster, the following resources will help get you up and running quickly:

The Limitations of Scaling with EC2

October 8, 2008

Just as with any platform you choose, EC2 has its own limitations as well. These limitations are often different and harder to overcome than what you might find while running your own hardware. Without the proper planning and development, these limitations can wind up being extremely detrimental to the well being and scalability of your website or service.

There are quite a few blogs, articles and reviews out there that mention all the positive aspects of EC2 and I have written a few of them myself. However, I think users need to be informed of the negative aspects of a particular platform as well as the positive. I will be brief with this post as my next will focus on designing an architecture around these limitations.

The biggest limitations of Amazon’s EC2 at the moment, as I have experienced them, are the latencies between instances, the latencies between instances and storage (local and EBS), and the lack of powerful instances with more than 15GB of RAM and 4 virtual CPUs.

The latency issues can all be traced back to the same root cause: a shared LAN with thousands of non-localized instances all competing for bandwidth. Normally, one would think a LAN would be quick… and they generally are, especially when the servers are sitting right next to each other with a single switch in between them. However, Amazon’s network is much more extensive than most local LANs, and chances are your packets are hitting multiple switches and routers on their way from one instance to another. Every extra hop between instances adds to the packet’s round trip time. You can think of Amazon’s LAN as a really small Internet. Its layout is very similar to that of the Internet: there is no cohesiveness or localization of instances in relation to one another, so lots of data has to go from one end of the LAN to the other. This leads to data traveling much farther than it needs to, and all the congestion problems that are found on the Internet can be found on Amazon’s LAN.

For computationally intensive tasks this really isn’t too big a deal, but for those who rely on speedy database calls, every millisecond added per request really starts adding up if you have lots of requests per page. When the CitySquares site moved from our own local servers to EC2 we noticed a 4-10x increase in query times, which we attribute mainly to the high latency of the LAN. Since our servers are no longer within feet of each other, we have to contend with longer distances between instances and congestion on the LAN.
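To see how quickly this adds up, here is a small sketch of the arithmetic. Only the 4-10x slowdown comes from our measurements; the number of queries per page and the baseline time per query are hypothetical values picked for illustration, and the function name is invented.

```python
def page_query_time_ms(queries_per_page: int, per_query_ms: float,
                       slowdown: float = 1.0) -> float:
    """Time a page spends waiting on its database queries, run one after another.

    slowdown is the multiplier applied to every query (1.0 = original network).
    """
    return queries_per_page * per_query_ms * slowdown


# Hypothetical page: 50 sequential queries at 0.5 ms each on the old servers.
QUERIES_PER_PAGE = 50
BASELINE_QUERY_MS = 0.5

baseline = page_query_time_ms(QUERIES_PER_PAGE, BASELINE_QUERY_MS)
best_case = page_query_time_ms(QUERIES_PER_PAGE, BASELINE_QUERY_MS, slowdown=4)
worst_case = page_query_time_ms(QUERIES_PER_PAGE, BASELINE_QUERY_MS, slowdown=10)

print(f"own hardware: {baseline:.0f} ms per page")
print(f"EC2 at 4x:    {best_case:.0f} ms per page")
print(f"EC2 at 10x:   {worst_case:.0f} ms per page")
```

With those assumed numbers, a page that used to wait 25 ms on the database now waits between 100 ms and 250 ms, before any rendering work is done.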

Another thing to take into consideration is the network latency for Amazon’s EBS. For applications that move around a lot of data, EBS is probably a godsend, as it has a high bandwidth capability. However, in CitySquares’ case, we wind up doing a lot of small file transfers to and from our NFS server as well as EBS volumes. So while there is a lot of bandwidth available to us, we can’t really take advantage of it, especially since we have to contend with the latency and overhead of transferring many small files. Not only are small files an issue for us, but we also run our MySQL database off of an EBS volume. Swapping to disk has always been a critical issue for databases, but the added overhead of network traffic can wreak havoc on your database load much more than normal disk swapping. You can think of the difference in access times between a local disk and a disk over a network as a book on a bookcase vs a book somewhere down the hall in storage room B. Clearly the second option takes far longer when you are looking for something, and that’s what you have to work with if you want the peace of mind of persistent storage.

The last and most important limitation for us at CitySquares was the lack of an all powerful machine. The largest instance Amazon has to offer is one with just 15GB of RAM and 4 virtual CPUs. In a day and age where you can easily find machines with 64GB of RAM and 16 CPUs, you are definitely limited by Amazon. In our case, it would be much easier for us just to throw hardware at our database to scale up, but the only thing we have at our disposal is a paltry 15GB of RAM. How can this be the biggest machine they offer? Instead of dividing one of those machines in quarters, just give me the whole thing. It just seems ludicrous to me that the largest machine they offer is not much more powerful than the computer I’m using right now.

Long story short, just because you start using Amazon’s AWS doesn’t mean you can scale. Make sure your architecture is tolerant of higher latencies and can scale with lots of little machines because that’s all you have to work with.

Running your own hardware Vs EC2 and RightScale — Part 2

September 16, 2008

This week I’ve been reminded of a very important lesson… No matter how abstracted you are from your hardware, you still inherently rely on its smooth and consistent operation.

This past week CitySquares’ NFS server went down for the count and was completely unresponsive to any type of communication. In fact, the EC2 instance was so FUBAR we couldn’t even terminate it from our RightScale dashboard. A post on Amazon’s EC2 board was required to terminate it. It turns out the actual hardware our instance was running on had a catastrophic failure of some sort. Otherwise, at least so I’m told, server images are usually migrated automatically off of machines running in a degraded state.

Needless to say, the very reasons for deciding against running our own hardware have come back to plague us. Granted, we weren’t responsible for replacing the hardware, but we were still affected by the troublesome machine. We weren’t just slightly affected by the loss of our NFS server either. Since we are running off of a heavily modified Drupal CMS, our web servers depend on having a writable files directory. As it turned out, Apache just spun waiting for a response from the file system, and our web services ground to a halt waiting on a machine that was never going to respond… ever. Talk about a single point of failure! A non-critical component, serving mainly images and photos, managed to take down our entire production deployment.

This event has prompted us to move forward with a rewrite of Drupal’s core file handling functionality. The rewrite will include automatically directing file uploads to a separate domain name like csimg.com or something similar. Yahoo goes into more detail with their performance best practices. However, editing the Drupal core is generally frowned upon and heavily discouraged, since it usually conflicts with the upgrade path and makes the Drupal core much more difficult to maintain. While we haven’t stayed out of the Drupal core entirely, the changes we have made are minor and only for performance improvements. I believe it is possible to stay out of the core file handling by hooking into it with the nodeapi, but it seems like more trouble than it’s worth.

The idea behind the file handling rewrite is to serve our images and photos directly from our Co-Location while keeping a local files directory on each EC2 instance for non user committed things like CSS and JS aggregation caching among other simple cache related items coming from the Drupal core. This rewrite will allow us to run one less EC2 instance, saving us some money as well as remove our dependence on a catastrophic single point of failure.

For the time being we have set up another NFS server, this time based on Amazon’s new EBS product. I spoke about this in a previous post. One of the issues we had when the last NFS server went down was the loss of user generated content. Once the instance went down, all the storage associated with that instance went down with it. There was no way to recover from the loss; it was just gone. This is just one of the many possible problems you can run into with the cloud. While on the pro side you don’t have to worry about owning your own hardware, the con side is that you can’t recover from failures like you can with your own hardware. This is a very distinct difference and should be seriously considered before dumping your current architecture for the cloud.

Running your own hardware Vs. EC2 and RightScale

August 20, 2008

A couple of weeks ago I began working with EC2 and RightScale in preparation for our big IT infrastructure changeover. I’ll start by giving a brief overview of our hardware infrastructure. Currently we’re running the CitySquares website on our own hardware in a Somerville co-location not too far from our headquarters in Boston’s trendy South End neighborhood.

From the very beginning our contract IT guy set us up with an extremely robust and flexible IT infrastructure. It consists of a few machines running Xen Hypervisors with Gentoo as the main host OS. Running Gentoo allows us to be as efficient as possible by specifically optimizing and compiling only the things we need. While this is a good step, it is Xen that really makes the big difference. It allows us to trade around resources as we see fit: more memory here, more virtual CPUs there, all done on the fly. For a startup or any company with limited resources this is rather essential. You never know where you are going to need to allocate resources in the months to come.

While this is all well and good, we are still limited when it comes to scaling with increasing traffic or adding resource intensive features. We have a set amount of available hardware, and adding more is an expensive upfront capital investment. Not only that, but in order for us to really take advantage of Xen and use it to its full potential, we were presented with an expensive first option: the purchase of a SAN and more servers. For those in the industry I don’t think I need to mention that these get expensive in a hurry. This would have been a huge upfront cost for us, one we didn’t want to budget for. The second option, which is the one we eventually went with, was to drop our current hardware solution and take the plunge into cloud computing with Amazon’s EC2.

Here I am now. A couple of weeks into the switch with a lot of lessons learned. There are definitely pros and cons for each platform, either going with EC2 or rolling your own architecture. Before I get into the details I want to make clear that there are many factors involved in choosing a technology platform. I am only going to scratch the surface, touching upon the major pros and cons with respect to my own opinions with best interest for CitySquares in mind.

Let me begin by starting with the pros for running your own hardware:

- The biggest pro is most definitely persistence across reboots. I cannot stress the importance of this one enough. You really take for granted the ability to edit a file and expect it to be there the next time the machine is restarted.

  - You only need to configure the software once. Once it’s running you don’t really care what you did to make it work. It just works, every time you reboot.

  - UPDATE 8/21/08: Amazon releases persistent storage.

- Complete and utter control over everything that is running. This extends from the OS to the amount of RAM, CPU specs, hard drive specs, NICs, etc. Whether you have an economy or a performance server is all up to you.

- Rather stable and unchanging architecture. Server host keys stay the same, and the same number of servers are running today as there were yesterday and as there will be tomorrow.

- Reboot times. For those times when something is just AFU you can hit the reset button and be back up and running in a few minutes.

- You can physically touch it… It’s not just in the cloud somewhere.

Some cons for running your own hardware:

- Companies with limited resources usually end up with architectures that exhibit single points of failure.

  - As an aside, you can be plagued by hardware failures at any time. This is usually accompanied by angry emails, texts and calls at 3am on Saturday morning.

- Limited scalability options. For a rapidly expanding and growing website, the couple of weeks it takes to order and install new hardware can be detrimental to your potential traffic and revenue stream.

- Management of physical pieces of hardware. It’s a royal pain to have to go to a co-location to upgrade or fix anything that might need maintenance. Not to mention the potential down time.

  - Also, there are many hidden costs associated with IT maintenance.

- Up front capital expenditures can be quite costly. This is especially true from a cash flow perspective.

- Servers and other supporting hardware are rendered obsolete every few years, requiring the purchase of new equipment.

These pros and cons for running your own hardware are pretty straightforward. Some people might mention managed hosting solutions, which would eliminate most of the cons related to server maintenance and hardware failures. However, this added service comes with an added price tag for the hosting. Whether it is right for you or your company is something to look into. We decided to skip this intermediary solution and go straight to the latest and greatest solution, which is cloud computing. To be specific, we sided with Amazon’s EC2 (Elastic Compute Cloud) using RightScale as our management tool.

Some of the pros for using EC2 in conjunction with the RightScale dashboard are as follows:

- Near infinite resources (server instances, Amazon’s S3 storage, etc.) available nearly instantaneously. No more Slashdot DoS attacks if everything is properly configured and set to introduce more servers automatically. (RightScale Benefit)

- No upfront costs, everything is usage based. In the middle of the night, if you are only utilizing one server, that’s all you pay for. Likewise, if during peak hours you’re running twenty servers, you pay for those twenty servers. (Amazon Benefit, RightScale is a monthly service)

- No hardware to think of. If fifty servers go down at Amazon we won’t even know about it. No more angry calls at 3am. (Amazon Benefit)

- Multiple availability zones. This allows us to run our master database in one zone which is completely separate from our slave database. So if there is an actual fire or power outage in one zone the others will theoretically be unaffected. The single points of failure mentioned before are a thing of the past and this is just one example. (Amazon Benefit)

- Ability to clone whole deployments to create testing and development environments that exactly mirror the current production when you need them. (RightScale Benefit)

- Security updates are taken care of for the most part. RightScale provides base server images which are customized upon boot with the latest software updates. (RightScale Benefit)

- Monitoring and alerting tools are very good and highly customizable. (RightScale Benefit)

Some of the cons for using EC2 and RightScale:

- No persistence after reboot. I can’t stress this one enough! All local changes will be wiped and you’ll start with a blank slate!

  - All user contributed changes must be backed up to a persistent storage medium or they will be lost! We back up incrementally every 15 minutes with a full backup every night.

  - UPDATE 8/21/08: Amazon releases persistent storage.

- Writing scripts to configure everything upon boot is a time consuming and tedious process requiring a lot of trial and error.

  - Every reboot takes approximately 10-20 minutes depending on the number and complexity of packages installed on boot, making the previous bullet point that much more painful.

  - A few of the pre-configured scripts are written quite well. The one for MySQL is as good as they get. You upload a config file complete with special tags for easy on the fly regular expression customization. The Apache scripts, on the other hand, are about as bad as they get. Everything must be configured after the fact.

  - With Apache, however, you’ll be writing regular expressions to match other regular expressions. Needless to say, it is a royal pain and you usually end up with unreadable gibberish.

So there you have it, take it as you wish. For CitySquares, EC2 and RightScale were the best options. They allow us to scale nearly effortlessly once configured. EC2 is also a much cheaper option up front, whereas owning your own hardware is generally cheaper in the long run. We did trade a lot of the pros of owning your own hardware to get the scalability and hardware abstraction of EC2. It was a tough decision for us to switch away from our current architecture, but in the end it will most likely be the best decision we’ve made. The flexibility and scalability of the EC2 and RightScale platform are by far the biggest advantages to switching, and in the end it’s what CitySquares needs.

Digging into HAProxy

August 13, 2008

Well, it’s been a few weeks since my last posting here and there is certainly a good reason for that. Every once in a while I just need to completely unplug from technology. So it only made sense for me to go away on vacation to the middle of nowhere up in Maine’s great north woods for a couple of weeks. No computers, no cellphones, no towns, no people, just dirt logging roads, lakes, rivers, wildlife and trees. Now that I’m back and caught up I will start posting regularly again.

Getting back to reality, as the title states, this post will focus on the reasons behind using HAProxy as well as a little bit on CitySquares’ implementation of the load balancer. Let me start by quoting a description of HAProxy from their website:

“HAProxy is a free, very fast and reliable solution offering high availability, load balancing, and proxying for TCP and HTTP-based applications. It is particularly suited for web sites crawling under very high loads while needing persistence or Layer7 processing. Supporting tens of thousands of connections is clearly realistic with todays hardware. “

While the high availability aspect of HAProxy is all well and good, everything is expected to be high availability these days. Any sort of downtime has become unacceptable, even in the middle of the night. This is especially true when relying on search engine driven traffic. I’ve noticed that search engines like Google and Yahoo, to name a couple, really ramp up their crawl rate in the wee hours of the morning. The crawl rate is boosted even more on weekend nights, when even fewer people are searching the web and the search engines can allocate more of their resources towards web crawls. CitySquares has certainly been subject to DoS attacks by GoogleBot on Friday nights.

This is where the load balancing aspect of HAProxy comes into play; it is one of the main reasons for choosing it as our front facing service. With just a couple of HAProxy servers we can maintain redundancy while having a nearly unlimited pool of Apache web servers to hand off requests to. We don’t need any special front facing load balancing hardware to act as a single point of failure. We can also keep some money in our pocket at the same time by utilizing a software solution. Luckily, HAProxy is open source and free to the world, licensed under the GPL v2.
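To make the idea of handing requests off to a pool concrete, here is a minimal Python sketch of round-robin balancing, one of the algorithms HAProxy supports. It is a toy model and not HAProxy’s implementation or our configuration: the `RoundRobinPool` class, its methods and the `web1`-style server names are all invented for illustration.

```python
from itertools import cycle


class RoundRobinPool:
    """Toy model of a balancer rotating requests across a pool of web servers."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)   # servers currently passing checks
        self._ring = cycle(self.backends)   # endless rotation over the pool

    def mark_down(self, backend):
        """Take a server out of rotation, e.g. after a failed health check."""
        self.healthy.discard(backend)

    def mark_up(self, backend):
        """Put a known server back into rotation."""
        if backend in self.backends:
            self.healthy.add(backend)

    def next_backend(self):
        """Return the next healthy server, skipping any that are down."""
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


pool = RoundRobinPool(["web1", "web2", "web3"])
first_four = [pool.next_backend() for _ in range(4)]

pool.mark_down("web2")                       # simulate a failed Apache server
after_failure = [pool.next_backend() for _ in range(2)]
```

Adding capacity in this model is just a longer list of backends, which is the property that makes a software balancer attractive on EC2.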

Not only does HAProxy handle our load balancing but it also serves as a central access point for DNS purposes. This solution is certainly much better than our current DNS round robin which is limited in its own right. Is this common sense? Probably, but I figured it was worth pointing out.

Lastly, security is always a concern for heavily trafficked and high profile sites. The developer behind HAProxy has been very proactive with the program architecture and coding practices and as such HAProxy can claim it’s never had a single known vulnerability in over five years. Since all front facing applications are subject to attacks from so many different sources these days, having a stable and secure application is a godsend when it comes to any sort of security related IT maintenance.

As far as implementation goes, I suspect that eventually we might need to move the HAProxy instances onto their own dedicated servers as traffic increases. In the meantime, with EC2, we are running them in parallel with Apache on the same servers. This is purely a cost savings measure as every server instance started with EC2 results in more cash out the door. As it is, HAProxy is incredibly fast and lean and really doesn’t consume much in the way of system resources, either CPU load or memory utilization.

There are certainly other reasons for choosing HAProxy but they are outside the scope of this post. I encourage everyone to take a serious look at HAProxy when spec’ing out a load balancer or proxy.

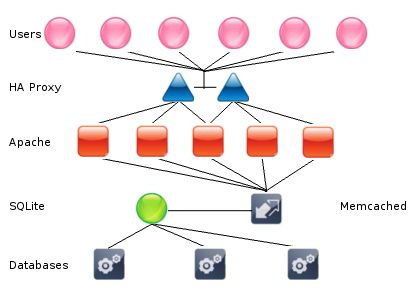

In my last post I mentioned the numerous technologies which were on tap for the upcoming version of CitySquares. This installment continues the overview of the underlying architecture and begins to dig a little deeper into the actual implementation of the technologies. The focus of this new architecture is on creating a much more stable and scalable platform for us to work with. Before I get into the details, you’ll see I’ve provided a graphic representation of how the architecture will be laid out.

Bear with me as I explain the work flow behind this graphic as it is not 100% clear from the visual representation. First off, I run Ubuntu Linux which is great for just about everything I need, except for creating any sort of graphics, so I apologize in advance for the lackluster graphic. As you can see, there are a few different layers: users, HA Proxy, Apache, Memcached, SQLite and finally MySQL labeled as databases.

First and foremost are our beloved users, without whom we would have no need for a website. Starting from the beginning, the users request a page from CitySquares, and their request is passed through one of two HA Proxy servers. The sole purpose of these two machines is to load balance the incoming requests among all our Apache web servers and serve as a failsafe for one another. Once the user’s request has been accepted and forwarded along to Apache, we actually begin to process the request.

The Apache servers run PHP and XCache modules. The PHP part is, I feel, fairly straightforward and outside the scope of this post, so I will skip that part of the architecture. XCache, however, is used in conjunction with PHP as an enhancement to it. More specifically, XCache is an opcode optimizer and cache. It removes the compilation time of PHP scripts by caching their compiled and optimized state directly in the shared memory of the Apache server. This compiled version can speed up page generation by up to 500%, improving overall response time and reducing server load.

Just as with all dynamic websites, most if not all of the actual data is stored in databases. Gone are the days of flat files with near zero processing required. Databases are the new workhorses of the web world and as such usually become the bottleneck of the overall system. CitySquares is in a somewhat unique position: nearly all our page loads involve quite a bit of location and distance based processing, and nearly all of this is done in our MySQL database. So while our Apache servers are sitting idle waiting for responses to their queries, the DB is performing the brunt of the work, calculating distances between objects and the like.

We can reduce this bottleneck in a couple of different ways, the first of which is object caching. We will use Memcached to cache objects returned from the database. Say, for example, we know the distance between two businesses. We know with a fair amount of certainty that those two businesses are going to be in the same place they were an hour ago, just as they were a week ago and as they will be a day from now. So we can cache this information with an expiration time of a couple of days, saving ourselves the expense of calculating the distance between them on every page load. Of course, if a user comes by and changes the location of one of these businesses, we can expire the object in cache and replace it with a newly calculated object straight from the database on the subsequent page load. These expensive queries require large table scans and mathematical calculations on every row. Caching their results frees up the database and allows it to do what it does best: store and retrieve data.
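The caching flow described above can be sketched roughly as follows. This is an illustration in Python rather than our PHP code, and several things in it are assumptions: `TTLCache` is a tiny in-process stand-in for Memcached so the example runs without a server, the haversine formula stands in for whatever distance calculation the database performs, and the `DistanceService` class, its key format and the coordinates are hypothetical.

```python
import math
import time

TWO_DAYS = 2 * 24 * 60 * 60  # cache lifetime in seconds ("a couple of days")


class TTLCache:
    """In-process stand-in for Memcached: get/set with expiry, plus delete."""

    def __init__(self, clock=time.monotonic):
        self._store = {}
        self._clock = clock

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:      # entry has outlived its TTL
            del self._store[key]
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._store[key] = (value, self._clock() + ttl_seconds)

    def delete(self, key):
        self._store.pop(key, None)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance; stands in for the expensive database query."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    a = math.sin((p2 - p1) / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


class DistanceService:
    def __init__(self, cache, locations):
        self.cache = cache
        self.locations = locations  # stand-in for the DB: id -> (lat, lon)
        self.db_calls = 0           # counts how often we fall through to the "DB"

    @staticmethod
    def _key(a, b):
        low, high = sorted((a, b))  # same key regardless of argument order
        return f"dist:{low}:{high}"

    def distance_km(self, a, b):
        key = self._key(a, b)
        cached = self.cache.get(key)
        if cached is not None:      # cache hit: no database work at all
            return cached
        self.db_calls += 1          # cache miss: do the expensive calculation
        value = haversine_km(*self.locations[a], *self.locations[b])
        self.cache.set(key, value, TWO_DAYS)
        return value

    def move_business(self, business_id, lat, lon):
        """A user edits a location: expire every cached distance involving it."""
        self.locations[business_id] = (lat, lon)
        for other in self.locations:
            if other != business_id:
                self.cache.delete(self._key(business_id, other))
```

The second request for the same pair never reaches the database, and moving a business only throws away the entries that mention it, so the next page load recomputes just those.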

In the case where we can’t find the data in Memcached, either because it doesn’t yet exist or because it has expired, we will turn to our databases. We must first query a SQLite instance, which is the gatekeeper between Apache and the numerous databases we have. By having a separate lookup table we can essentially divide and parcel out our data sets on a table by table basis, even down to an entry by entry basis. Depending on the type of data we are requesting, SQLite will provide us with the location of one database or another to query for our data.

One could argue that this just adds another layer of latency, and they would be correct. However, as scalability becomes an issue you will find that adding database replication generally results in diminishing returns. As new servers are brought online, the overhead of replicating writes across all the replicated servers becomes choking and creates its own bottleneck. With a lookup table and a horizontal database architecture, on the other hand, we don’t have to worry about database replication nearly as much, because the data sets can simply be divided among different databases. How you go about this varies greatly depending on your data. For CitySquares the solution turns out to be rather simple. Everything we do is location specific, so it only makes sense that each data set is only as big as its parent city. Theoretically, every city and all the data related to it could reside in its own database. As you can probably guess, our performance is then limited only by the biggest cities: Manhattan, Brooklyn, etc. In these few cases we can always fall back to bigger and better servers and/or replication if necessary.

Just as our database has become a bottleneck in our current site, so has our search engine, though to a lesser extent. We can take the lessons learned from our horizontal database architecture and apply them to the search engine architecture as well. By dividing our data sets into logical partitions we can keep our data from getting too large and unwieldy, and with these smaller data sets we can reduce or remove altogether the overhead associated with replicating data over multiple machines.

While this solution sounds great, it won’t be worth the effort if a programmer has to check Memcached, then SQLite and then finally MySQL for every query. For this to be feasible from a programmer’s standpoint, the programmer should never have to think about the underlying architecture. I will discuss this in greater detail in the upcoming installments. Stay tuned.
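One way to hide the three tiers from the programmer is a single access object that performs the cache check, the lookup and the database query internally. The sketch below is an assumption about how that could look, not the design the later installments describe: a plain dict stands in for Memcached, `locate` stands in for the SQLite lookup, `sqlite3` connections stand in for the MySQL databases, and the `Repository` class and `business` table are hypothetical.

```python
import sqlite3


class Repository:
    """Single entry point: callers ask for a business and never see the tiers."""

    def __init__(self, cache, locate, connect):
        self._cache = cache      # dict standing in for Memcached (assumption)
        self._locate = locate    # city -> shard name, i.e. the lookup step
        self._connect = connect  # shard name -> open database connection

    def get_business(self, city, business_id):
        key = f"business:{city}:{business_id}"
        # Tier 1: the cache.
        if key in self._cache:
            return self._cache[key]
        # Tier 2: ask the lookup which database holds this city's data.
        shard = self._locate(city)
        # Tier 3: query that database and remember the answer.
        row = self._connect(shard).execute(
            "SELECT id, name FROM business WHERE id = ?", (business_id,)
        ).fetchone()
        if row is None:
            return None
        record = {"id": row[0], "name": row[1]}
        self._cache[key] = record
        return record


def make_shard(rows):
    """Build an in-memory SQLite database standing in for one city's MySQL database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE business (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO business VALUES (?, ?)", rows)
    return conn
```

Application code would then call only `repo.get_business("Boston", 1)`. Whether the answer came from the cache or from a particular city's database is invisible to the caller, which is the property the paragraph above asks for.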

Turning the page — PHP, Symfony, ORM

July 18, 2008

I have come to the conclusion that I should be cataloging my work, thoughts, theories and activities for others to read and learn from my experiences as a web engineer. Let me begin by mentioning I work at a company called CitySquares and for the last year I have been working diligently on the current CitySquares site.

This has been a great year for me as I was given the opportunity to learn the inner workings of the Drupal CMS. While Drupal is a great CMS/Framework, it is inherently still a prepackaged CMS designed for the things that 99% of the community needs. CitySquares unfortunately falls within the other 1%. I must say that we have accomplished quite a bit using Drupal’s community modules in conjunction with our own custom written ones. However, there are plans in the works that we would like to implement but just can’t within the Drupal framework.

All is not lost, though. With the current iteration running, stable and gaining traffic every week, I have the opportunity to turn the page and begin work on the next phase of development. This is an exciting time, and I will use this medium to convey the successes as well as the issues as development here continues.

That said, we have decided to scrap our Drupal based architecture in favor of a more extensible framework, Symfony. Symfony is a PHP based OO framework that resembles Ruby on Rails. Not only will we gain the benefit of switching to an OO style framework, but we will also be using Doctrine as our ORM and Smarty as our template engine.

The idea is that this combination of technologies will help us alleviate two of the major problems we have with Drupal, namely scalability and codability. I’ve been toying with some ideas to help eliminate these two thorns in our side, which I will discuss at a later time, so look forward to hearing my ideas on a full stack horizontal architecture.